- December 1, 2020

Video has worked the same way for a long, long time. And because of its unique qualities, video has been largely immune to the machine learning explosion upending industry after industry. WaveOne hopes to change that by taking the decades-old paradigm of video codecs and making them AI-powered — while somehow avoiding the pitfalls that would-be codec revolutionizers and “AI-powered” startups often fall into.

The startup has until recently limited itself to showing its results in papers and presentations, but with a recently raised $6.5M seed round, they are ready to move towards testing and deploying their actual product. It’s no niche: video compression may seem a bit in the weeds to some, but there’s no doubt it’s become one of the most important processes of the modern internet.

Here’s how it’s worked pretty much since the old days when digital video first became possible. Developers create a standard algorithm for compressing and decompressing video, a codec, which can easily be distributed and run on common computing platforms. This is stuff like MPEG-2, H.264, and that sort of thing. The hard work of compressing a video can be done by content providers and servers, while the comparatively lighter work of decompressing is done on the end user’s machines.

This approach is quite effective, and improvements to codecs (which allow more efficient compression) have led to the possibility of sites like YouTube. If videos were 10 times bigger, YouTube would never have been able to launch when it did. The other major change was beginning to rely on hardware acceleration of said codecs — your computer or GPU might have an actual chip in it with the codec baked in, ready to perform decompression tasks with far greater speed than an ordinary general-purpose CPU in a phone. Just one problem: when you get a new codec, you need new hardware.

But consider this: many new phones ship with a chip designed for running machine learning models, which like codecs can be accelerated, but unlike them the hardware is not bespoke for the model. So why aren’t we using this ML-optimized chip for video? Well, that’s exactly what WaveOne intends to do.

I should say that I initially spoke with WaveOne’s cofounders, CEO Lubomir Bourdev and CTO Oren Rippel, from a position of significant skepticism despite their impressive backgrounds. We’ve seen codec companies come and go, but the tech industry has coalesced around a handful of formats and standards that are revised in a painfully slow fashion. H.265, for instance, was introduced in 2013, but years afterwards its predecessor, H.264, was only beginning to achieve ubiquity. It’s more like the 3G, 4G, 5G system than version 7, version 7.1, etc. So smaller options, even superior ones that are free and open source, tend to get ground beneath the wheels of the industry-spanning standards.

This track record for codecs, plus the fact that startups like to describe practically everything as “AI-powered,” had me expecting something at best misguided, at worst scammy. But I was more than pleasantly surprised: In fact WaveOne is the kind of thing that seems obvious in retrospect and appears to have a first-mover advantage.

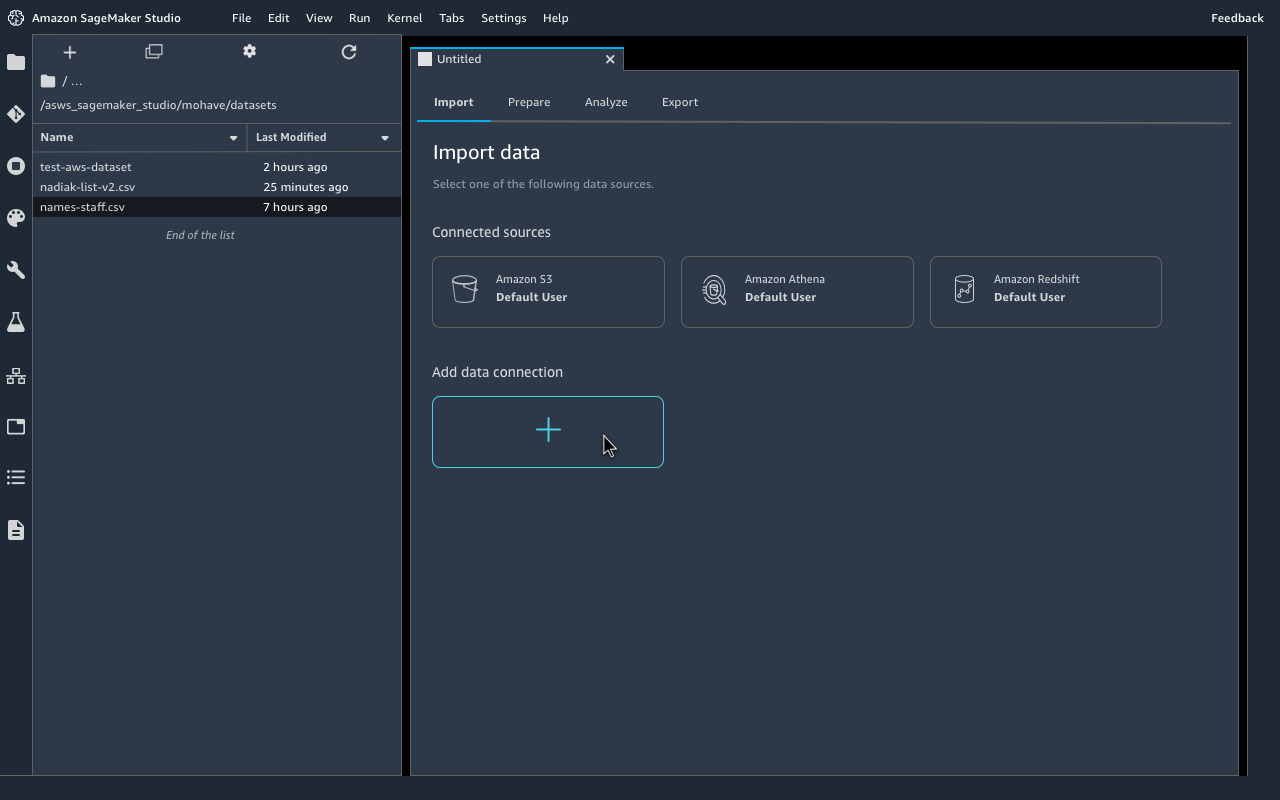

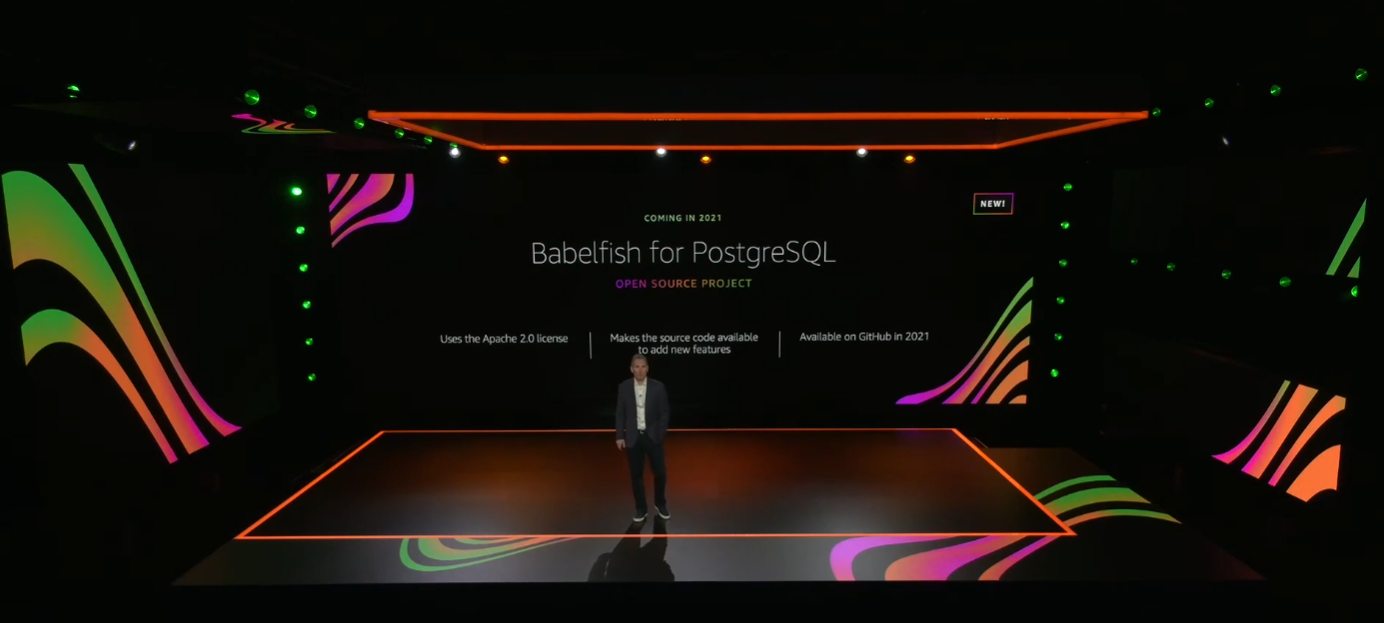

The first thing Rippel and Bourdev made clear was that AI actually has a role to play here. While codecs like H.265 aren’t dumb — they’re very advanced in many ways — they aren’t exactly smart, either. They can tell where to put more bits into encoding color or detail in a general sense, but they can’t, for instance, tell where there’s a face in the shot that should be getting extra love, or a sign or trees that can be done in a special way to save time.

But face and scene detection are practically solved problems in computer vision. Why shouldn’t a video codec understand that there is a face, then dedicate a proportionate amount of resources to it? It’s a perfectly good question. The answer is that the codecs aren’t flexible enough. They don’t take that kind of input. Maybe they will in H.266, whenever that comes out, and a couple years later it’ll be supported on high-end devices.

So how would you do it now? Well, by writing a video compression and decompression algorithm that runs on the AI accelerators many phones and computers already have or will have very soon, and by integrating scene and object detection into it from the get-go. Like Krisp.ai understanding what a voice is and isolating it without hyper-complex spectrum analysis, AI can make determinations like that with visual data incredibly fast and pass that on to the actual video compression part.

Image Credits: WaveOne

Variable and intelligent allocation of data means the compression process can be very efficient without sacrificing image quality. WaveOne claims to reduce the size of files by as much as half, with better gains in more complex scenes. When you’re serving videos hundreds of millions of times (or to a million people at once), even fractions of a percent add up, let alone gains of this size. Bandwidth doesn’t cost as much as it used to, but it still isn’t free.
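To put rough numbers on "even fractions of a percent add up," here is a back-of-the-envelope sketch in Python. The file size and serve count are hypothetical figures chosen for illustration, not data from WaveOne or any video service; only the "as much as half" reduction comes from the company's claim.

```python
def bytes_saved(file_size_bytes, times_served, size_reduction):
    """Bytes not sent when every served copy shrinks by `size_reduction` (0 to 1)."""
    if not 0.0 <= size_reduction <= 1.0:
        raise ValueError("size_reduction must be between 0 and 1")
    return file_size_bytes * times_served * size_reduction


# Hypothetical inputs: a 100 MB video served 100 million times.
FILE_SIZE_BYTES = 100_000_000
TIMES_SERVED = 100_000_000
TERABYTE = 10**12

for label, reduction in [("0.5% smaller", 0.005), ("50% smaller", 0.5)]:
    saved_tb = bytes_saved(FILE_SIZE_BYTES, TIMES_SERVED, reduction) / TERABYTE
    print(f"{label}: about {saved_tb:,.0f} TB less data served")
```

Under these assumed inputs, a half-percent improvement already avoids roughly 50 TB of transfer, and a 50% reduction avoids roughly 5,000 TB.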

Understanding the image (or being told) also lets the codec see what kind of content it is; a video call should prioritize faces if possible, of course, but a game streamer may want to prioritize small details, while animation requires yet another approach to minimize artifacts in its large single-color regions. This can all be done on the fly with an AI-powered compression scheme.
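The idea of spending bits where they matter can be sketched in a few lines of Python. This is a toy illustration, not WaveOne's method: the region labels, the priority weights, and the `allocate_bits` helper are all assumptions made up for the example, and a real codec would make these decisions inside a learned model rather than with a lookup table.

```python
# Hypothetical priority weights per content type (illustrative values only).
PROFILES = {
    "video_call": {"face": 6.0, "text": 3.0, "background": 1.0},
    "game_stream": {"face": 2.0, "text": 5.0, "background": 3.0},
}


def allocate_bits(region_weights, total_bits):
    """Split a frame's bit budget across regions in proportion to their weights."""
    total_weight = sum(region_weights.values())
    if total_weight <= 0:
        raise ValueError("at least one region needs a positive weight")
    # Round each share down first so the budget is never exceeded.
    alloc = {
        name: int(total_bits * weight / total_weight)
        for name, weight in region_weights.items()
    }
    # Hand any bits lost to rounding to the highest-priority regions.
    leftover = total_bits - sum(alloc.values())
    by_priority = sorted(region_weights, key=region_weights.get, reverse=True)
    for name in by_priority[:leftover]:
        alloc[name] += 1
    return alloc


# Regions a detector might report for one frame of a video call (assumed).
frame_budget = 1000
print(allocate_bits(PROFILES["video_call"], frame_budget))
print(allocate_bits(PROFILES["game_stream"], frame_budget))
```

Swapping the profile changes where the same budget goes, which is the point of the paragraph above: the same frame can be encoded differently depending on what kind of content it is.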

There are implications beyond consumer tech as well: A self-driving car, sending video between components or to a central server, could save time and improve video quality by focusing on what the autonomous system designates important — vehicles, pedestrians, animals — and not wasting time and bits on a featureless sky, trees in the distance, and so on.

Content-aware encoding and decoding is probably the most versatile and easy to grasp advantage WaveOne claims to offer, but Bourdev also noted that the method is much more resistant to disruption from bandwidth issues. It’s one of the other failings of traditional video codecs that missing a few bits can throw off the whole operation — that’s why you get frozen frames and glitches. But ML-based decoding can easily make a “best guess” based on whatever bits it has, so when your bandwidth is suddenly restricted you don’t freeze, just get a bit less detailed for the duration.
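A toy example shows what "a bit less detailed" instead of "frozen" can look like. The Python sketch below uses classical coarse-to-fine layering as a stand-in, which is an assumption for illustration: it is not a learned decoder and not how WaveOne works, but it shows a decoder producing a usable estimate from whatever portion of the data arrived.

```python
def encode_layers(samples, steps=(64, 16, 4)):
    """Encode samples as coarse-to-fine layers; each layer refines the last."""
    approx = [0] * len(samples)
    layers = []
    for step in steps:
        # Quantize what the previous layers still got wrong.
        layer = [round((s - a) / step) for s, a in zip(samples, approx)]
        layers.append((step, layer))
        approx = [a + q * step for a, q in zip(approx, layer)]
    return layers


def decode_layers(received_layers, length):
    """Reconstruct from however many layers arrived (possibly none)."""
    approx = [0] * length
    for step, layer in received_layers:
        approx = [a + q * step for a, q in zip(approx, layer)]
    return approx


def max_error(original, decoded):
    return max(abs(o - d) for o, d in zip(original, decoded))


# Made-up pixel values for one row of a frame.
pixels = [12, 200, 97, 255, 0, 130]
layers = encode_layers(pixels)

for received in range(1, len(layers) + 1):
    decoded = decode_layers(layers[:received], len(pixels))
    print(f"{received} layer(s) received: max error {max_error(pixels, decoded)}")
```

Each additional layer that makes it through tightens the reconstruction, so a drop in bandwidth costs detail rather than the whole frame.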

Example of different codecs compressing the same frame.

These benefits sound great, but as before the question is not “can we improve on the status quo?” (obviously we can) but “can we scale those improvements?”

“The road is littered with failed attempts to create cool new codecs,” admitted Bourdev. “Part of the reason for that is hardware acceleration; even if you came up with the best codec in the world, good luck if you don’t have a hardware accelerator that runs it. You don’t just need better algorithms, you need to be able to run them in a scalable way across a large variety of devices, on the edge and in the cloud.”

That’s why the special AI cores on the latest generation of devices are so important. This is hardware acceleration that can be adapted in milliseconds to a new purpose. And WaveOne happens to have been working for years on video-focused machine learning that will run on those cores, doing the work that H.26X accelerators have been doing for years, but faster and with far more flexibility.

Of course, there’s still the question of “standards.” Is it very likely that anyone is going to sign on to a single company’s proprietary video compression methods? Well, someone’s got to do it! After all, standards don’t come etched on stone tablets. And as Bourdev and Rippel explained, they actually are using standards — just not the way we’ve come to think of them.

Before, a “standard” in video meant adhering to a rigidly defined software method so that your app or device could work with standards-compatible video efficiently and correctly. But that’s not the only kind of standard. Instead of being a soup-to-nuts method, WaveOne is an implementation that adheres to standards on the ML and deployment side.

They’re building the platform to be compatible with all the major ML distribution and development publishers like TensorFlow, ONNX, Apple’s CoreML, and others. Meanwhile the models actually developed for encoding and decoding video will run just like any other accelerated software on edge or cloud devices: deploy it on AWS or Azure, run it locally with ARM or Intel compute modules, and so on.

It feels like WaveOne may be onto something that ticks all the boxes of a major B2B event: it invisibly improves things for customers, runs on existing or upcoming hardware without modification, and saves costs immediately (potentially, anyhow), but can also be invested in to add value.

Perhaps that’s why they managed to attract such a large seed round: $6.5 million, led by Khosla Ventures, with $1M each from Vela Partners and Incubate Fund, plus $650K from Omega Venture Partners and $350K from Blue Ivy.

Right now WaveOne is sort of in a pre-alpha stage, having demonstrated the technology satisfactorily but not built a full-scale product. The seed round, Rippel said, was to de-risk the technology, and while there’s still lots of R&D yet to be done, they’ve proven that the core offering works — building the infrastructure and API layers comes next and amounts to a totally different phase for the company. Even so, he said, they hope to get testing done and line up a few customers before they raise more money.

The future of the video industry may not look a lot like the last couple decades, and that could be a very good thing. No doubt we’ll be hearing more from WaveOne as it migrates from lab to product.